The HyperText Markup Language standardizes the creation of two dimensional (2D) web pages out of text, two dimensional images, and simple user controls such as buttons and text boxes. HTML was created without considering any three dimensional (3D) location for the information produced. HTML syntax formats informational objects using short text strings called tags.

On the other hand, the Virtual Reality Modeling Language (VRML) was created specifically to standardize 3D content for the web. Any object created using VRML has a location in 3D space. A user of a VRML viewer walks into a VRML scene using a mouse or keyboard and navigates as she does in the real world. VRML syntax formats informational objects using short text strings called node identifiers.

VRML includes nodes for managing text strings in three-dimensional space. These VRML nodes are very primitive compared with the richness and diversity of the HTML tags created specifically for advanced text formatting and presentation. It does not make sense to reinvent ASCII string formatting in the VRML standard. Instead, I suggest extending the Appearance node of the VRML 2 standard to define how to open an HTML document at a three-dimensional location within a VRML scene. This paper reviews the most popular techniques web authors use to make HTML and VRML coexist on a web site. It then suggests a way to incorporate HTML into the VRML standard as a natural extension of VRML's three dimensions. Consolidating HTML and VRML would simplify web browsers and provide a framework for immersive web navigation where the computer screen no longer exists.

#VRML V2.0 utf8

DEF in_front Viewpoint {

position 0 -500 0

orientation 1 0 0 1.57

fieldOfView 0.785398

description "IN_FRONT"

}

NavigationInfo {

type "EXAMINE"

}

DEF DRAWER0 Transform {

children [

DEF TS1 TouchSensor {}

DEF DRAWER Transform {

children [

DEF BOTTOM Transform {

children [

DEF PLANK Shape {

appearance Appearance {

material Material {diffuseColor 0 .2 0}

}

geometry Box {size 1 1 1}

}

]

scale 100 200 2

translation 0 0 0

},

DEF BACK Transform {

children USE PLANK

scale 100 2 80

translation 0 100 40

},

DEF FRONT Transform {

children USE PLANK

scale 100 2 80

translation 0 -100 40

},

DEF RIGHT Transform {

children USE PLANK

scale 2 200 80

translation 50 0 40

},

DEF LEFT Transform {

children USE PLANK

scale 2 200 80

translation -50 0 40

},

]

}

DEF FOLDER Transform {

children [

DEF TS3 TouchSensor {}

DEF PAGE0 Transform {

children [

DEF PAGE Shape {

appearance Appearance {

material Material {diffuseColor 1 1 .85}

}

geometry Box {size 1 1 1}

}

]

scale 90 2 70

translation 0 80 32

},

DEF PAGE1 Transform {

children [

USE PAGE

]

scale 90 2 60

translation 0 83 27

}

]

}

]

},

DEF DRAWER1 Transform {

children [

DEF TS0 TouchSensor {}

USE DRAWER

]

translation 0 0 -85

},

DEF DRAWER2 Transform {

children [

DEF TS2 TouchSensor {}

USE DRAWER

]

translation 0 0 85

},

Transform {

children USE DRAWER

scale 1.15 1.25 2.8

translation 0 -100 40

rotation 1 0 0 -1.57

}

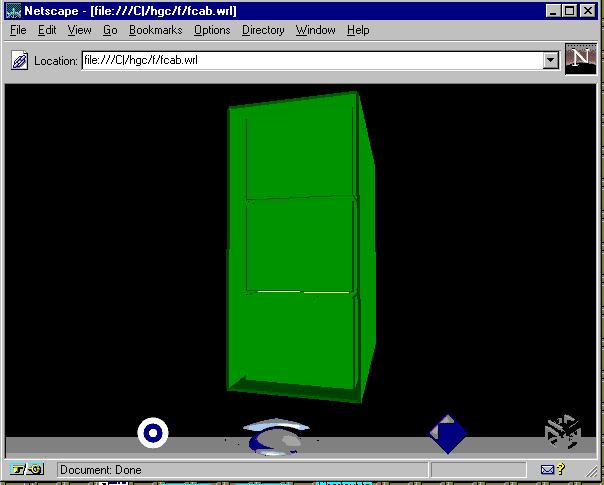

The VRML 2 file in Listing 1.1 creates the geometry and material of each cabinet, drawer, and folder and places them in three-dimensional space. Through the TouchSensor node, the file also makes each object that contains a TouchSensor aware of the user's pointing device. For example, for users interacting with the scene by mouse, clicking on the first drawer activates the TouchSensor node defined as TS1. The VRML 2 file itself can contain an event-handling routine for each TouchSensor, or, through an Application Programming Interface (API) into another programming language, the event can be handled by a separate routine written in that language. In this simple file cabinet, an active TouchSensor opens its drawer if the drawer is closed and closes it if the drawer is open. TouchSensors for folders work the same way: they open each folder in a location where its contents can easily be viewed and then close it again.

The file cabinet contains HTML documents. How a selected HTML document is presented to the audience depends on the method the VRML author chooses. Currently, an author can use one of three technologies to reach HTML information from a VRML scene: a web browser plug-in architecture, HTML frame technology, or a VRML 2 API to another programming language. The next three sections cover how each option works. The rest of the paper then discusses a better option for handling HTML in three dimensions, based on considering VRML a superset of HTML.
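As a rough illustration of the in-file event handling described above, the sketch below toggles a single drawer using a TouchSensor, a Script node, and two ROUTE statements, all standard VRML 2 constructs. The names SLIDER, TS, and TOGGLE, the simplified one-box drawer, and the 150-unit slide distance are assumptions made for this example and do not appear in Listing 1.1. The inline script also assumes a viewer that supports the javascript: scripting protocol.

```vrml
#VRML V2.0 utf8

# Hypothetical, simplified drawer: a single box instead of five planks.
DEF SLIDER Transform {
  children [
    DEF TS TouchSensor {}
    Shape {
      appearance Appearance {
        material Material { diffuseColor 0 .2 0 }
      }
      geometry Box { size 100 200 80 }
    }
  ]
}

# Remembers whether the drawer is open and emits the matching translation.
DEF TOGGLE Script {
  eventIn  SFTime  clicked
  eventOut SFVec3f position_changed
  field    SFBool  isOpen FALSE
  url "javascript:
    function clicked(value, timestamp) {
      // Flip the stored state on every click.
      isOpen = !isOpen;
      if (isOpen) {
        // Assumed open position: slide toward the viewer along -Y.
        position_changed = new SFVec3f(0, -150, 0);
      } else {
        position_changed = new SFVec3f(0, 0, 0);
      }
    }
  "
}

# A completed click on the drawer triggers the script,
# and the script output moves the drawer.
ROUTE TS.touchTime TO TOGGLE.clicked
ROUTE TOGGLE.position_changed TO SLIDER.set_translation
```

A smoother variant could route the click into a TimeSensor and PositionInterpolator instead of jumping directly between the two positions.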

In the VRML file cabinet example, a hyperlink can be associated with a VRML object by embedding the object's Transform node inside an Anchor node. For example, to activate a link in a file folder, the folder could be embedded as follows (note that in this case, a simple relative URL is passed to the browser for retrieval upon activation of the Anchor):

Anchor {

url ["example.htm"]

children [

DEF FOLDER Transform {

# see Listing 1.1 for details

}

]

}

When the user interacts with a child object in the Anchor node, the plug-in makes a request to the browser to load the file associated with its url field. In the case of a URL specifying an HTML document, the browser then loads the HTML document in the same browser window, but on top of the VRML scene.

Plug-in technology takes advantage of a web browser's ability to request and receive files from web servers around the world. The plug-in need only know how to make the request to the browser and then the browser does the rest. This provides the benefit of minimizing computer resources as the code to perform web navigation is put in RAM or hard drive memory once by the web browser. The navigation and load routines can be optimized within the browser and the plug-in can take advantage of the latest improvements on an ongoing basis. Browsers use many sophisticated routines to optimize system resource management. For example, under the guidance of the web browser, a file can be loaded in the background or integrated into the current browser window.

To create a VRML plug-in, the developer uses two object-oriented classes supplied by the browser developer. One class sets up communication from the plug-in to the browser, and the other from the browser to the plug-in. Each time the plug-in wants the browser's services, it calls the appropriate routine, such as UserAgent, Version, GetURL, GetURLNotify, or RequestRead. The browser, in turn, communicates with the plug-in through routines such as Initialize, New, SetWindow, NewStream, WriteReady, Write, Destroy, and Shutdown [4].

Disadvantages associated with just using the plug-in technology are the lack of a centralized source of control and the lack of security over accessed files [11]. The browser continually loads files on top of files in the form of a simple stack. There is little control over this behavior from the standpoint of the user.

For the VRML file cabinet example using frames, the Anchor node is enhanced to load its url field in a different frame as follows:

Anchor {

url ["example.htm"]

parameter ["target=HTML"]

children [

DEF FOLDER Transform {

# see Listing 1.1 for details

}

]

}

The Anchor node has a parameter field that can be set to the string value "target=HTML" to identify the frame named HTML as its presentation destination. The frames are created through the use of an HTML Frame Definition Document [3], the source of which is provided in Listing 1.2. Figure 1.2 shows the frame technology example for a VRML file cabinet. The VRML frame is presented on the left side of the browser window and the HTML frame on the right side. Interactions with folders in the left frame change the contents of the right frame.

Web traditionalists have responded critically to frames. Tim Berners-Lee's original design of the web was based on the page as the atomic unit of information delivery, and the URL addressing scheme provides a one-to-one correspondence between address and page. With frames, a single URL points to a browser window of information that is composed of multiple pages. Because no state information is saved with a web bookmark, bookmarks become less exact when they point to a frame definition document. Inexperienced web navigators are then more likely to get lost or encounter unexpected results when navigating a web site that uses frames [14].

The Java API allows Java variables to be initialized with pointers to VRML nodes. Java is a powerful object-oriented programming language with a comprehensive API development kit [10]. Using Java, a variable within a computer program can be initialized to point to a Billboard node in a VRML file. Within a computer program, the Java variable can change the location of the Billboard in 3D space on the fly, and the VRML viewer will continually update the changes in the 3D world. In this manner, an HTML document's contents can be inserted on a Billboard (using a flat rectangular polygon as a backdrop) and experienced by the user following the behavior coded in the Java program [2]. This method has the flexibility to move the HTML document around in 3D space while maintaining a perpendicular angle to the user. Yet, if the perpendicular attribute is not desired, the Billboard node need not be used. In that case, the HTML document's contents can remain in a fixed 3D-space location without the Billboard and the user can move to view the document at any angle desired. In the VRML file cabinet example, the VRML file can be changed as follows to take advantage of a billboarded HTML document:

DEF FOLDER Transform {

children [

DEF TS3 TouchSensor {}

DEF PAGE0 Transform {

# see Listing 1.1 for details

},

DEF PAGE1 Transform {

# see Listing 1.1 for details

},

DEF HTML_DOC Transform {

children [

Billboard {

children [

Shape {

appearance Appearance {

texture ImageTexture {

url "html_bitmap.jpg"

}

}

geometry IndexedFaceSet {

coord Coordinate {

point [ -1 -1 0, 1 -1 0, 1 1 0, -1 1 0 ]

}

coordIndex [ 0, 1, 2, 3 ]

}

}

]

}

]

}

]

}
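For comparison, simple movement of a billboarded document can also be approximated inside the VRML file itself, without an external program. The sketch below is one possible arrangement, assuming a four-second looping path and the hypothetical names CLOCK, PATH, and DOC_MOVER. It is not the Java-driven approach described above, only an in-file stand-in that shows the viewer updating the Billboard's position continuously.

```vrml
#VRML V2.0 utf8

# Hypothetical clock: one cycle every 4 seconds, repeating.
DEF CLOCK TimeSensor {
  cycleInterval 4
  loop TRUE
}

# Assumed path: move 2 units along +Z and back again.
DEF PATH PositionInterpolator {
  key      [ 0, 0.5, 1 ]
  keyValue [ 0 0 0, 0 0 2, 0 0 0 ]
}

DEF DOC_MOVER Transform {
  children [
    Billboard {
      # 0 0 0 keeps the page facing the viewer about every axis.
      axisOfRotation 0 0 0
      children [
        Shape {
          appearance Appearance {
            texture ImageTexture { url "html_bitmap.jpg" }
          }
          geometry IndexedFaceSet {
            coord Coordinate {
              point [ -1 -1 0, 1 -1 0, 1 1 0, -1 1 0 ]
            }
            coordIndex [ 0, 1, 2, 3 ]
          }
        }
      ]
    }
  ]
}

# The clock drives the interpolator, which drives the translation.
ROUTE CLOCK.fraction_changed TO PATH.set_fraction
ROUTE PATH.value_changed TO DOC_MOVER.set_translation
```

An external program remains the more flexible choice when the movement depends on logic that an interpolator cannot express.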

Currently, to use the API technology described above, each HTML document has to be converted to a bitmap representation (called an image map in VRML vernacular) in order to be displayed in a VRML scene. The bitmap scaling algorithms used in current VRML viewers are weak, and legibility is lost for the sake of presentation flexibility and rendering speed. The user cannot control the HTML document as she could using the plug-in or frame technologies. The document is no longer loaded as an HTML MIME data type in the web browser, but a 3D-space presentation is gained.

Still, HTML document perusal is afforded some handy features by the web browser. A reader can scroll, turn images on or off, or click on hyperlinks to go elsewhere. These features just are not available to a reader of an HTML-based image map referenced in a VRML file. Why not? I propose to demonstrate how compatible HTML would be as a subset of the VRML standard. Considered as a subset of VRML, HTML documents could still retain their popular scrolling, linking, and imaging features, yet still be included in three dimensional space for a more natural organization and use. The rest of this paper will build that vision and provide examples of how it could work.

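An HTML document consisting of a title, a second-level heading, and one sentence of body text containing a single boldfaced word could easily be translated to look like the following VRML syntax structure: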

HTML {

Head {

title [Example HTML Document]

}

Body {

children [

h2 [Example HTML Document]

Text {

This is the body of the text using one B{ bold [boldfaced]} word

}

]

}

}

The VRML parser could be extended to handle a whole new set of nodes created specifically to retain current HTML document functionality. I will present eight new fields that could make it easier for HTML and VRML to coexist in a VRML scene.

Shape {

appearance Appearance {

html HTML {

Head {

title [Example HTML Document]

}

Body {

children [

h2 [Example HTML Document]

Text {

This is the body of the text using one B{ bold [boldfaced]} word

}

]

}

}

}

geometry DEF IFS IndexedFaceSet {

coord Coordinate {

point [ -1 -1 0, 1 -1 0, 1 1 0, -1 1 0 ]

}

coordIndex [ 0, 1, 2, 3 ]

}

}

The Shape node above puts the example HTML document on the square polygon defined in its geometry field.

Shape {

appearance Appearance {

html HTMLurl {

url ["example.htm"]

}

}

geometry DEF IFS IndexedFaceSet {

coord Coordinate {

point [ -1 -1 0, 1 -1 0, 1 1 0, -1 1 0 ]

}

coordIndex [ 0, 1, 2, 3 ]

}

}

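This example produces the same result as the HTML node example, but the document is referenced through the url field rather than embedded in the VRML file, which adds the benefit of managing the component objects separately.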

Shape {

appearance Appearance {

html HTMLurl {

url ["example.htm"]

HTMLstring "HTML Example"

}

}

geometry DEF IFS IndexedFaceSet {

coord Coordinate {

point [ -1 -1 0, 1 -1 0, 1 1 0, -1 1 0 ]

}

coordIndex [ 0, 1, 2, 3 ]

}

}

In this example, at a certain distance from the current viewpoint, the string "HTML Example" would be displayed by the viewer instead of the full HTML document. More sophisticated rules could be assigned to the HTML and HTMLurl fields such that a string might be taken from the title of the HTML document by default. In that case, the HTMLstring field would be an opportunity to override the default behavior.
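The distance-dependent behavior proposed for HTMLstring resembles what the standard LOD node already provides for geometry. The sketch below approximates it with existing VRML 2 nodes only: near the viewer it shows a bitmap of the document, and beyond an assumed switch distance of 20 units it shows a short text label instead. The switch distance and the reuse of html_bitmap.jpg are assumptions for illustration; the proposed HTMLstring field itself is not implemented by any of these nodes.

```vrml
#VRML V2.0 utf8

LOD {
  # Assumed threshold: level 0 within 20 units, level 1 beyond.
  range [ 20 ]
  level [
    # Level 0 (near): the document rendered as a textured square.
    Shape {
      appearance Appearance {
        texture ImageTexture { url "html_bitmap.jpg" }
      }
      geometry IndexedFaceSet {
        coord Coordinate {
          point [ -1 -1 0, 1 -1 0, 1 1 0, -1 1 0 ]
        }
        coordIndex [ 0, 1, 2, 3 ]
      }
    }
    # Level 1 (far): a label standing in for the HTMLstring value.
    Billboard {
      children [
        Shape {
          appearance Appearance {
            material Material { diffuseColor 1 1 1 }
          }
          geometry Text {
            string "HTML Example"
            fontStyle FontStyle { justify "MIDDLE" }
          }
        }
      ]
    }
  ]
}
```

With the proposed field, an author would write one line instead of a second level of geometry, and the viewer could choose the switch distance itself.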

An HTMLstringType field could enumerate different behaviors for tying the user's actions to the browser's presentation. An example of using the HTMLstringType would be the following:

Shape {

appearance Appearance {

html HTMLurl {

url ["example.htm"]

HTMLstring "HTML Example"

HTMLstringType "STATUS"

}

}

geometry DEF IFS IndexedFaceSet {

coord Coordinate {

point [ -1 -1 0, 1 -1 0, 1 1 0, -1 1 0 ]

}

coordIndex [ 0, 1, 2, 3 ]

}

}

The "STATUS" keyword would tell the browser to show the HTMLstring string on the status bar at great distances. Common values for the HTMLstringType field could be identified as part of the VRML specification.

Shape {

appearance Appearance {

html HTMLurl {

url ["example.htm"]

HTMLstring "HTML Example"

HTMLstringType "ABOVE"

HTMLstringSize .2

}

}

geometry DEF IFS IndexedFaceSet {

coord Coordinate {

point [ -1 -1 0, 1 -1 0, 1 1 0, -1 1 0 ]

}

coordIndex [ 0, 1, 2, 3 ]

}

}

In this example, the HTMLstring string would cover 20% of the horizontal width of the field of view. Of course, other HTMLstring related fields could be derived to determine string color, orientation, duration, etc. The HTMLstringSize is just an example of one of those fields.

Shape {

appearance Appearance {

html HTMLurl {

url ["example.htm"]

HTMLstring "HTML Example"

HTMLactiveType "TOUCH"

}

}

geometry DEF IFS IndexedFaceSet {

coord Coordinate {

point [ -1 -1 0, 1 -1 0, 1 1 0, -1 1 0 ]

}

coordIndex [ 0, 1, 2, 3 ]

}

}

In this example, the HTMLactiveType is set to "TOUCH" which would allow the user to make it active as she would any TouchSensor in the world. For desktop VR systems of today, TouchSensors are usually activated through a mouse click. Immersive systems use the touch of a virtual hand. Other example enumerated possibilities for the HTMLactiveType field would be "DRAG", "OVER", and "PROXIMITY". The "DRAG" value would require the user to move the geometry with the mouse, the "OVER" value would make it active upon the mouse passing over the geometry, and the "PROXIMITY" value would activate the HTML full screen when the user was within a certain distance. Note that a similar field could identify how the HTML document would be de-activated and put back within its Shape node's geometry.
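The "OVER" behavior described above can be roughly imitated today with standard nodes, which may help clarify what the proposed field would replace. In the sketch below, a TouchSensor's isOver event drives a Switch between a text label and a bitmap of the document. The names DOC, SENSE, and PICK and the bitmap file are assumptions for this example, and the inline script assumes a viewer that supports the javascript: protocol. For "PROXIMITY", a ProximitySensor's isActive event could be routed to the same script instead.

```vrml
#VRML V2.0 utf8

Group {
  children [
    # Reports whether the pointer is over the sibling geometry.
    DEF SENSE TouchSensor {}
    DEF DOC Switch {
      whichChoice 0
      choice [
        # Choice 0 (inactive): a short label.
        Shape {
          appearance Appearance {
            material Material { diffuseColor 1 1 1 }
          }
          geometry Text { string "HTML Example" }
        }
        # Choice 1 (active): the document as a textured square.
        Shape {
          appearance Appearance {
            texture ImageTexture { url "html_bitmap.jpg" }
          }
          geometry IndexedFaceSet {
            coord Coordinate {
              point [ -1 -1 0, 1 -1 0, 1 1 0, -1 1 0 ]
            }
            coordIndex [ 0, 1, 2, 3 ]
          }
        }
      ]
    }
  ]
}

# Converts the boolean isOver event into a Switch index.
DEF PICK Script {
  eventIn  SFBool  set_over
  eventOut SFInt32 choice_changed
  url "javascript:
    function set_over(value, timestamp) {
      if (value) {
        choice_changed = 1;
      } else {
        choice_changed = 0;
      }
    }
  "
}

ROUTE SENSE.isOver TO PICK.set_over
ROUTE PICK.choice_changed TO DOC.set_whichChoice
```

The amount of wiring needed for this one behavior suggests why a single enumerated field on the node would be more convenient for authors.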

Shape {

appearance Appearance {

html HTMLurl {

url ["example.htm"]

HTMLstring "HTML Example"

HTMLactiveType "TOUCH"

HTMLactiveSize .4

}

}

geometry DEF IFS IndexedFaceSet {

coord Coordinate {

point [ -1 -1 0, 1 -1 0, 1 1 0, -1 1 0 ]

}

coordIndex [ 0, 1, 2, 3 ]

}

}

In this example, only 40% of the screen would be covered by an active HTML document.

Shape {

appearance Appearance {

html HTMLurl {

url ["example.htm"]

HTMLstring "HTML Example"

HTMLactiveType "TOUCH"

HTMLparameters ["size=.4"]

}

}

geometry DEF IFS IndexedFaceSet {

coord Coordinate {

point [ -1 -1 0, 1 -1 0, 1 1 0, -1 1 0 ]

}

coordIndex [ 0, 1, 2, 3 ]

}

}

It is this author's belief that a 3D organization of text material is more natural than the current search methods afforded by search engines such as Digital's Alta Vista or Yahoo. These search engines produce one-dimensional lists of links to HTML documents related to a requested topic. Currently, web navigators access HTML documents through URLs (Uniform Resource Locators), which are not translatable into any 3D location. Web travelers using URLs to locate documents feel more like discontinuous time travelers than geographical travelers. Yet, outside of the web, human beings travel on foot, by car, or by plane over a continuous landscape. This continuity helps people remember where things are in the universe. It helps everyone navigate daily in a logical manner. A web traveler visiting a 3D virtual library can walk among the book stacks as in the real world. She can navigate within a specific aisle to find a book of interest. She may find an interesting book she was not aware of but stumbled upon because of its neighbors in three-dimensional space.

More research is needed into which metaphor most easily helps information searchers find the information they desire. Once a flexible and scalable metaphor is identified, all web page authors can work to insert their documents into a global, three-dimensional space. Should our planet ever get together to create an organized cyberspace, the tools now being created for authoring VRML worlds should help web authors insert HTML documents into it, combining text and three-dimensional information.

[2] Cornell, Gary and Horstmann, Cay S., Core Java, SunSoft Press, Mountain View, CA (1997) [Highly technical overview of popular Java APIs available for use by Java developers. Overview of the design specifications of the Java language as well as implications on Object Oriented Programming styles.]

[3] Lemay, Laura, Teach Yourself Web Publishing With HTML 3.2 in 14 Days Professional Reference Edition, Sams.net Publishing, Indianapolis, IN (1996) [The bible of HTML technical references. Excellent overview of Frames, JavaScript and Java implications on web publishing. Lots of examples of use of HTML syntax.]

[4] Morgan, Mike, Netscape Plug-Ins Developer's Kit, MacMillan Computer Publishing, Indianapolis, IN (1996) [Technical reference on creating plug-in applications within Netscape Navigator's class hierarchy and application programming interface. Overview of the benefits of the plug-in architecture with examples of creating various types of plug-in applications.]

[5] Netscape Corporation, Frames Page (Accessed 4 December 1996) [Overview of using frames within HTML. Description of the process along with the benefits of using frames.]

[6] Netscape Corporation, Java API For Frames Overview Page (Accessed 4 December 1996) [Overview of using the Java API within frames on a web page. Description of the API functionality along with examples of the messaging structure between Java, JavaScript and the contents of a frame.]

[7] Netscape Corporation, Plug-Ins Home Page (Accessed 4 December 1996) [Overview of the plug-in environment with examples of the communication between plug-ins and Netscape Navigator. Examples of plug-ins available for download.]

[8] San Diego Supercomputer Center, VRML Repository Home Page (Accessed 12 September 1996) [Web site detailing the latest information available on VRML. Links to web sites incorporating VRML, tutorials about VRML, and opinions on the proper use of VRML.]

[9] Silicon Graphics Inc., Java/VRML API Page (Accessed 2 December 1996) [Technical specification for a standard VRML 2.0 Java API implemented in version 2 of the CosmoPlayer VRML viewer available from SGI. Overview of the function and syntax of the function calls available.]

[10] Sun Microsystems Inc., Java Developers Kit Index Page (Accessed 12 September 1996) [Technical overview of the Java Developers Kit application programming interface (API). Discussion of available classes, methods, and appropriate usage including syntax and parameter lists.]

[11] Turlington, Shannon, Official Netscape Plug-in Book, Netscape Press, Research Triangle Park, NC (1996) [Overview of Netscape Navigator plug-ins including disadvantages of the plug-in architecture and lack of central control point. Examples of good plug-in design concepts and available plug-in products.]

[12] VRML Architecture Group, VRML 2 Final Specification (Accessed 2 December 1996) [The final specification of VRML 2.0 from the organization responsible for its maintenance. Includes design considerations, overview of the purpose of VRML, complete node and field syntax and examples of appropriate use.]

[13] World Wide Web Consortium, HTML Guidelines Page (Accessed 21 December 1996) [The final specification of HTML 2.0 with comment as to the status of HTML 3.2 from the organization responsible for its maintenance. Links to many articles and papers defining and challenging the HTML standard.]

[14] Jakob Nielsen, Why Frames Suck (Most of the Time) (Accessed 6 March 1997)